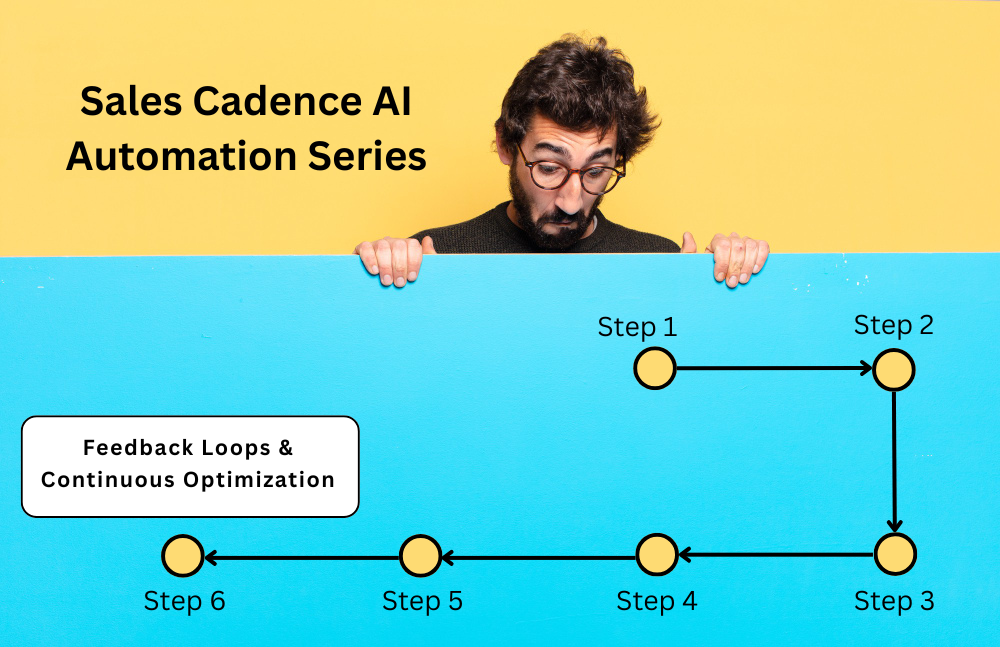

You’ve done it.

Over the last five parts, you’ve designed and built an end-to-end system for AI-driven sales outreach. One that segments leads with precision, validates contact data, generates personalized messaging, delivers it across multiple channels, and scores lead behavior based on actual engagement.

But even the best-built system—if left untouched—will degrade over time. Markets change. Messaging decays. What worked last month may not work next quarter. People stop responding to certain phrasing, tech gets commoditized, tone expectations evolve, and your audience matures.

That’s why the most important final piece of your system is the feedback loop. The process that allows your automation to learn, adapt, and improve—without starting over.

In this final part, we’ll walk through how to close the loop between performance and generation—feeding real-world data back into GPT, adjusting prompts based on response behavior, tracking what works, and turning outreach into a living, learning system.

Step 1: Capture and Structure Post-Send Data

Optimization doesn’t start with assumptions. It starts with structured insights from real activity. If you’ve followed Parts 4 and 5, you’re already tracking which messages were sent, opened, clicked, replied to, or ignored. Now it’s time to make that data usable.

Your system should log, per message:

- The prompt used

- The channel used

- The exact message version (store GPT output verbatim)

- Whether it was delivered successfully

- Whether it was opened or clicked

- Whether it got a reply and, if so, what kind of reply

- Whether a reply led to a meeting or opportunity

If you’re working out of Airtable, Notion, or your CRM, make sure these are structured fields—not just free text. If you’re logging this in a custom database, even better—you can run queries and score historical performance with ease.

Each record now becomes a learning sample—an example of what worked (or didn’t), with traceability back to the exact prompt and channel strategy used.
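As a minimal sketch, assuming a custom database (or an export from Airtable or your CRM), one log record might look like the Python dataclass below. The field names, such as `prompt_version` and `reply_label`, are hypothetical; map them onto your own schema. The point is that every outcome flag sits next to the prompt version and the verbatim output that produced it.

```python
from dataclasses import dataclass, asdict
from datetime import datetime
from typing import Optional


@dataclass
class MessageLog:
    """One sent message, stored as a learning sample (hypothetical schema)."""
    lead_id: str
    prompt_version: str            # ties the output back to the exact prompt
    channel: str                   # e.g. "email", "linkedin", "whatsapp"
    message_text: str              # GPT output, stored verbatim
    sent_at: datetime
    delivered: bool = False
    opened: bool = False
    clicked: bool = False
    replied: bool = False
    reply_text: Optional[str] = None
    reply_label: Optional[str] = None   # filled in later by a reply classifier
    meeting_booked: bool = False

    def outcome(self) -> str:
        """Strongest signal reached, from booked meeting down to failed delivery."""
        if self.meeting_booked:
            return "meeting"
        if self.replied:
            return "replied"
        if self.clicked:
            return "clicked"
        if self.opened:
            return "opened"
        return "delivered" if self.delivered else "failed"


log = MessageLog("lead-42", "cto_v2", "email", "Hi Sam, quick question about your stack.",
                 datetime(2024, 5, 1, 9, 30), delivered=True, opened=True)
print(log.outcome())                  # opened
print(asdict(log)["prompt_version"])  # cto_v2
```

Because `asdict` turns a record into a plain dict, the same structure can be written to a database row or pushed to Airtable fields without a separate mapping layer.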

Step 2: Classify Outcomes with AI

While basic metrics like “reply = success” and “no open = failure” can give you directional insight, real learning comes from understanding the content and tone of replies.

This is where GPT (or Claude) moves from generator to interpreter.

You can use prompt-based classification agents to read responses and assign tags such as:

- Positive response: high intent

- Neutral response: polite but passive

- Negative response: rejection or unsubscribe

- Objection: price, timing, or unclear need

- Referral: passed to someone else

- Ambiguous: unclear meaning

Prompt example:

“Classify the following sales reply into one of these buckets: Positive, Neutral, Negative, Objection, Referral, Ambiguous. Respond with the bucket name only.”

This allows you to see which message types led to which kinds of responses, and whether positive tone actually correlated with bookings—or if a “neutral” reply led to a closed deal 3 weeks later.

Feed these classifications back into your message logs, so every line of copy you generated has a performance label.
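Here is a minimal sketch of that loop in Python: classify each reply, validate the label, write it back to the log row, and then check how each label relates to bookings. It assumes a hypothetical `call_model` function that sends a prompt to whichever LLM you use (GPT, Claude) and returns its text. The prompt asks for the bucket name only, so the answer is easy to validate, and anything that doesn't name exactly one bucket is stored as Ambiguous rather than guessed. The sample replies are invented for illustration.

```python
from collections import defaultdict
from typing import Callable

LABELS = ("Positive", "Neutral", "Negative", "Objection", "Referral", "Ambiguous")


def build_classifier_prompt(reply_text: str) -> str:
    """Wrap the classification instruction around a single reply."""
    return (
        "Classify the following sales reply into one of these buckets: "
        + ", ".join(LABELS)
        + ". Respond with the bucket name only.\n\nReply:\n"
        + reply_text
    )


def parse_label(model_output: str) -> str:
    """Accept the answer only if it names exactly one known bucket."""
    text = model_output.lower()
    found = [label for label in LABELS if label.lower() in text]
    return found[0] if len(found) == 1 else "Ambiguous"


def label_log(row: dict, call_model: Callable[[str], str]) -> dict:
    """Attach a reply label to one log row; rows without a reply stay unlabeled."""
    if row.get("reply_text"):
        row["reply_label"] = parse_label(call_model(build_classifier_prompt(row["reply_text"])))
    return row


def booking_rate_by_label(rows) -> dict:
    """Share of labeled replies, per label, that later led to a booked meeting."""
    totals, booked = defaultdict(int), defaultdict(int)
    for row in rows:
        label = row.get("reply_label")
        if label is None:
            continue
        totals[label] += 1
        booked[label] += bool(row.get("meeting_booked"))
    return {label: booked[label] / totals[label] for label in totals}


def fake_model(prompt: str) -> str:
    """Stand-in for a real GPT/Claude call, for demonstration only."""
    return "Neutral" if "maybe later" in prompt else "Positive"


rows = [
    {"reply_text": "Sounds great, send times.", "meeting_booked": True},
    {"reply_text": "Thanks, maybe later.", "meeting_booked": True},
    {"reply_text": "Interesting, tell me more.", "meeting_booked": False},
    {"reply_text": None, "meeting_booked": False},
]
rows = [label_log(r, fake_model) for r in rows]
print(booking_rate_by_label(rows))  # {'Positive': 0.5, 'Neutral': 1.0}
```

In practice, `meeting_booked` should be read after a fixed follow-up window, so that a "neutral" reply which converts weeks later still gets credited to its label.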

Step 3: Measure Prompt and Sequence Performance

Now that replies are tagged and logs are centralized, you can start analyzing:

- Which prompt version had the best positive reply rate?

- Which sequences produced the most booked calls?

- Which subject lines were ignored entirely?

- Which LinkedIn messages resulted in an accepted connection but no DM open?

These insights should inform:

- Which messages to retain and scale

- Which to pause or rewrite

- What kinds of phrases trigger high engagement vs. silence

You can automate some of this analysis with GPT-based evaluators, or simply with well-structured analytics: dashboards in Retool or Metabase, or reports embedded in your CRM.

If you’re running messaging through LangChain or Make, you can include a “prompt version” field in every record—allowing you to do version-to-outcome analysis across thousands of leads.
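Once each record carries a prompt version, version-to-outcome analysis is a simple group-by. A minimal Python sketch, assuming log rows are plain dicts with hypothetical `prompt_version`, `reply_label`, and `meeting_booked` keys (the same analysis is a `GROUP BY` query if you log to a database). The sample rows are made up for illustration.

```python
from collections import defaultdict


def rates_by_prompt_version(rows):
    """Per prompt version: messages sent, positive reply rate, and booking rate."""
    stats = defaultdict(lambda: {"sent": 0, "positive": 0, "booked": 0})
    for row in rows:
        s = stats[row["prompt_version"]]
        s["sent"] += 1
        s["positive"] += row.get("reply_label") == "Positive"
        s["booked"] += bool(row.get("meeting_booked"))
    return {
        version: {
            "sent": s["sent"],
            "positive_rate": s["positive"] / s["sent"],
            "booking_rate": s["booked"] / s["sent"],
        }
        for version, s in stats.items()
    }


rows = [
    {"prompt_version": "v1", "reply_label": "Positive", "meeting_booked": True},
    {"prompt_version": "v1", "reply_label": None, "meeting_booked": False},
    {"prompt_version": "v2", "reply_label": "Neutral", "meeting_booked": False},
    {"prompt_version": "v2", "reply_label": "Positive", "meeting_booked": False},
    {"prompt_version": "v2", "reply_label": "Positive", "meeting_booked": True},
]
for version, r in rates_by_prompt_version(rows).items():
    print(version, r)
```

Rates computed on a handful of sends are mostly noise, so compare versions only once each has a reasonable sample.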

Step 4: Use Feedback to Rewrite Prompts and Improve Sequences

This is the payoff: now that you know what’s working and what’s not, you begin to rewrite not just the message—but the prompt that creates it.

Here’s where you create the loop.

When GPT-generated messages are ignored or draw negative replies, look for patterns. Maybe your intro line is too formal. Maybe your CTA comes too early. Maybe your subject lines are too vague.

Now, adjust the prompt:

Old:

“Write a 100-word cold email to a CTO who recently raised funding…”

New:

“Write a confident, short email to a Series A CTO who uses OpenAI tools, highlighting one customer story in under 80 words. End with a yes/no CTA.”

Do this per persona, per segment, per product line.
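One way to manage prompts per persona and segment is a small registry of versioned templates, so the version string can be logged alongside each output. A minimal sketch using Python's `string.Template`; the registry layout, keys, and version names are hypothetical, and v1 reproduces only the part of the old prompt shown above.

```python
from string import Template

# Hypothetical registry: (persona, segment) -> {version: prompt template}
PROMPTS = {
    ("CTO", "Series A"): {
        "v1": Template("Write a 100-word cold email to a $persona who recently raised funding."),
        "v2": Template(
            "Write a confident, short email to a $segment $persona who uses $tool, "
            "highlighting one customer story in under 80 words. End with a yes/no CTA."
        ),
    },
}


def render_prompt(persona: str, segment: str, version: str, **fields: str) -> tuple[str, str]:
    """Return (version, prompt text) so the version can be stored with the GPT output."""
    template = PROMPTS[(persona, segment)][version]
    # substitute() raises KeyError if a placeholder has no value, which catches
    # missing lead data before a half-filled prompt reaches the model.
    return version, template.substitute(persona=persona, segment=segment, **fields)


version, prompt = render_prompt("CTO", "Series A", "v2", tool="OpenAI tools")
print(prompt)
```

Extra keyword arguments are ignored by `substitute`, so one set of lead fields can be passed to every version of a prompt.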

If you’re using LangChain, you can run multiple prompt versions side by side as separate chains, compare their outcomes, and route future traffic to the better performer.

In Make.com or n8n, you can manually rotate prompts every X leads, track reply rate, and decide which version wins.

This is no longer content testing. It’s prompt engineering with performance feedback.
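The rotate-and-compare loop can be sketched in a few lines of Python, for instance in your own backend or a code step in your workflow tool. This is not a LangChain, Make, or n8n API: `version_for_lead`, `pick_winner`, and the 200-send minimum are hypothetical choices, and picking the higher raw reply rate is a simple heuristic, not a statistical significance test.

```python
def version_for_lead(lead_index: int, versions: list[str], block_size: int) -> str:
    """Rotate to the next prompt version every `block_size` leads."""
    return versions[(lead_index // block_size) % len(versions)]


def pick_winner(results: dict[str, tuple[int, int]], min_sends: int = 200):
    """results maps version -> (sends, replies).

    Returns the version with the highest reply rate, or None while any
    version is still below `min_sends` (too early to call).
    """
    if any(sends < min_sends for sends, _ in results.values()):
        return None
    return max(results, key=lambda v: results[v][1] / results[v][0])


versions = ["v1", "v2"]
print([version_for_lead(i, versions, block_size=50) for i in (0, 49, 50, 100)])
# ['v1', 'v1', 'v2', 'v1']
print(pick_winner({"v1": (250, 10), "v2": (240, 18)}))  # v2
print(pick_winner({"v1": (250, 10), "v2": (120, 9)}))   # None
```

Block rotation keeps each version running for a stretch of leads; if send timing varies a lot, alternating versions lead by lead spreads them more evenly across days and weeks.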

Step 5: Continuously Evolve Messaging with Lead-Level Learning

Finally, as your system matures, you can start evolving not just your overall prompts—but messaging at the individual lead level.

For example:

- If a lead opens emails but never clicks, try a more curiosity-driven subject line

- If a lead replies with vague interest, send a content-led follow-up rather than a meeting CTA

- If a lead responded in Q1 with “not right now,” and you’re re-engaging in Q3—reference that thread
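The three examples above translate directly into rules. A minimal sketch, assuming each lead record carries open and click counts plus the latest reply label from a classifier like the one described in Step 2; `LeadState` and the returned action strings are hypothetical, and treating "not right now" as a timing Objection is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LeadState:
    opens: int
    clicks: int
    last_reply_label: Optional[str] = None   # e.g. output of a reply classifier
    last_reply_text: Optional[str] = None


def next_touch(lead: LeadState) -> str:
    """Pick the style of the next message from the lead's own behavior."""
    if lead.last_reply_label == "Objection" and lead.last_reply_text:
        # e.g. "not right now": re-engage later and quote the earlier thread
        return "re-engage referencing the earlier thread"
    if lead.last_reply_label == "Neutral":
        # vague interest: offer content instead of pushing for a meeting
        return "content-led follow-up, no meeting CTA"
    if lead.opens > 0 and lead.clicks == 0:
        return "curiosity-driven subject line"
    return "continue default sequence"


print(next_touch(LeadState(opens=3, clicks=0)))  # curiosity-driven subject line
print(next_touch(LeadState(opens=1, clicks=1, last_reply_label="Neutral")))  # content-led follow-up, no meeting CTA
```

Rule order matters: a reply is a stronger signal than opens and clicks, so reply-based rules are checked first.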

You can use GPT to generate “lead-aware” messaging by feeding past response history as context.

Prompt example:

“This lead responded 3 months ago with: ‘Thanks, we’re focused on onboarding right now, maybe later.’ Generate a follow-up that references this, introduces one relevant success story, and suggests a quick catch-up.”

This turns your outbound system into a relationship memory machine—something no human rep can do for thousands of leads at once.
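Assembling that context can be automated. A minimal sketch, assuming past replies are stored with their dates in your message log; the function names, and the choice to pass in a customer story rather than let the model pick one, are hypothetical.

```python
from datetime import date


def months_between(earlier: date, later: date) -> int:
    """Whole calendar months between two dates (ignores the day of month)."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


def lead_aware_prompt(history: list[tuple[date, str]], today: date, story: str) -> str:
    """Build a follow-up prompt that carries the lead's own past replies as context."""
    lines = [f"- {months_between(d, today)} months ago: '{text}'" for d, text in history]
    return (
        "Past replies from this lead:\n"
        + "\n".join(lines)
        + "\nGenerate a follow-up that references the most recent reply, "
        + f"introduces this success story: {story}, and suggests a quick catch-up."
    )


prompt = lead_aware_prompt(
    [(date(2024, 5, 20), "Thanks, we're focused on onboarding right now, maybe later.")],
    today=date(2024, 8, 10),
    story="<one relevant customer story>",
)
print(prompt)
```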

Step 6: Build the Right Dashboard to See, Act, and Optimize

A system is only as good as what you can see from it. Without visibility, even the smartest GPT workflows become black boxes, and no one on your team knows what to prioritize, fix, or double down on.

This is why you need a centralized dashboard—not just for reporting, but for daily decision-making, debugging, and optimization.

The dashboard is not a vanity metrics board. It’s your control panel.

Let’s break it down into three views that matter.

1. Lead Activity View: Who’s Engaging and Why

This dashboard answers:

“Which leads are responding, engaging, or dropping off?”

Each row should represent a single lead and show:

- Current Score (from Part 5)

- Last message sent (timestamp + channel)

- Last action (open, click, reply, ignored)

- Sequence position (step 1, step 2, paused, completed)

- GPT-generated reply summary (e.g., “Neutral – Not ready until Q3”)

- Next action scheduled (with date and channel)

You can build this in:

- Retool for internal apps

- Airtable Interface Designer if you’re storing data in Airtable

- Notion databases with linked tables for founders or small teams

- Your CRM with embedded dashboards (e.g., HubSpot Custom Reports)

This view helps SDRs or founders see the top leads to follow up with, or spot drop-off trends.
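Behind this view, "top leads to follow up with" can be as simple as filtering for due next actions and sorting by score. A minimal sketch, assuming lead rows are dicts with hypothetical `lead_id`, `score`, and `next_action_date` fields.

```python
from datetime import date


def follow_up_queue(leads, today: date, limit: int = 10):
    """IDs of leads whose next action is due, highest score first."""
    due = [
        lead for lead in leads
        if lead["next_action_date"] is not None and lead["next_action_date"] <= today
    ]
    due.sort(key=lambda lead: lead["score"], reverse=True)
    return [lead["lead_id"] for lead in due[:limit]]


leads = [
    {"lead_id": "a", "score": 40, "next_action_date": date(2024, 6, 1)},
    {"lead_id": "b", "score": 85, "next_action_date": date(2024, 6, 3)},
    {"lead_id": "c", "score": 90, "next_action_date": date(2024, 7, 1)},  # not due yet
    {"lead_id": "d", "score": 70, "next_action_date": None},             # nothing scheduled
]
print(follow_up_queue(leads, today=date(2024, 6, 5)))  # ['b', 'a']
```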

2. Message Performance View: What’s Working

This dashboard is for optimization. It shows which messages, prompts, and sequences are actually performing.

You should track:

- Prompt version used

- Email subject line and preview text

- Channel used (email, LinkedIn, WhatsApp)

- Positive reply rate

- Booking rate

- Objection or unsubscribe rate

- Time to first response

- GPT output quality scores (if used)

Use this to spot trends:

- Is Prompt V2 working better than V1?

- Are short DMs getting more replies than long ones?

- Is one sequence step causing drop-offs?

Store this in a structured format and visualize it with:

- Google Looker Studio

- Metabase

- Looker Studio on top of BigQuery if you’re logging at high volume

- Or even Grafana for dev-heavy teams

This gives your RevOps or growth lead insight into which GPT outputs to scale or refine.
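Two of the questions above, short versus long DMs and time to first response, reduce to small calculations once messages are logged. A minimal sketch; the 50-word cutoff and the `text`, `replied`, `sent_at`, and `first_reply_at` fields are assumptions, and the sample messages are invented for illustration.

```python
from datetime import datetime
from statistics import median


def reply_rate_by_length(messages, max_short_words: int = 50):
    """Compare reply rates for short vs. long messages, split at a word-count cutoff."""
    counts = {"short": [0, 0], "long": [0, 0]}  # bucket -> [sent, replied]
    for m in messages:
        bucket = "short" if len(m["text"].split()) <= max_short_words else "long"
        counts[bucket][0] += 1
        counts[bucket][1] += bool(m["replied"])
    return {b: (replied / sent if sent else None) for b, (sent, replied) in counts.items()}


def median_hours_to_first_response(messages):
    """Median delay between send and first reply, over messages that got a reply."""
    hours = [
        (m["first_reply_at"] - m["sent_at"]).total_seconds() / 3600
        for m in messages
        if m.get("first_reply_at")
    ]
    return median(hours) if hours else None


messages = [
    {"text": "Quick question about your stack?", "replied": True,
     "sent_at": datetime(2024, 6, 1, 9), "first_reply_at": datetime(2024, 6, 1, 13)},
    {"text": " ".join(["word"] * 80), "replied": False,
     "sent_at": datetime(2024, 6, 1, 9), "first_reply_at": None},
    {"text": "Worth a quick chat?", "replied": True,
     "sent_at": datetime(2024, 6, 2, 9), "first_reply_at": datetime(2024, 6, 3, 9)},
]
print(reply_rate_by_length(messages))            # {'short': 1.0, 'long': 0.0}
print(median_hours_to_first_response(messages))  # 14.0
```

The median is used rather than the mean so that one reply arriving weeks later doesn't dominate the figure.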

3. System Health View: What’s Failing (Silently)

This is your alert system. It helps you identify:

- Which leads didn’t get a message due to an error

- Which GPT outputs failed, timed out, or returned blank

- When a webhook didn’t log a click or open properly

- Which CRM syncs failed or caused mismatches

Essential fields:

- Lead ID / Email

- Error source (GPT, webhook, delivery, CRM)

- Last success timestamp

- Retry status or fail reason

- Manual override option

Use this view to:

- Debug outreach pipelines

- Surface missed opportunities

- Alert your ops or tech team for intervention

You can build this into your backend, create a low-code panel with Retool or Appsmith, or push alerts into Slack via custom workflows.
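A minimal sketch of the alerting side, assuming failures are logged as dicts with hypothetical `lead_id`, `source`, and `retries` fields, and that three retries (an arbitrary threshold) means a human should step in. It only builds the JSON body; Slack incoming webhooks accept a payload with a `text` field, which you would POST to your own webhook URL.

```python
import json
from collections import Counter


def health_report(errors, max_retries: int = 3):
    """Count failures per source and list leads that have exhausted their retries."""
    by_source = Counter(e["source"] for e in errors)   # e.g. gpt, webhook, delivery, crm
    needs_manual = sorted({e["lead_id"] for e in errors if e["retries"] >= max_retries})
    return by_source, needs_manual


def slack_alert_payload(by_source: Counter, needs_manual: list[str]) -> str:
    """JSON body for a Slack incoming webhook, which accepts a `text` field."""
    summary = ", ".join(f"{source}: {n}" for source, n in by_source.most_common())
    manual = ", ".join(needs_manual) or "none"
    return json.dumps({"text": f"Outreach errors. {summary}. Manual review: {manual}"})


errors = [
    {"lead_id": "L2", "source": "gpt", "retries": 3},
    {"lead_id": "L1", "source": "gpt", "retries": 1},
    {"lead_id": "L3", "source": "crm", "retries": 5},
]
by_source, needs_manual = health_report(errors)
print(slack_alert_payload(by_source, needs_manual))
```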

Making It Actionable: Who Uses Which View?

- Founders / Growth Heads: Use the Performance View weekly to evaluate strategy.

- SDRs: Use the Lead Activity View daily to prioritize effort.

- Ops / Tech Teams: Use the System Health View to keep things clean and error-free.

- Everyone: Gets clarity. No one has to ask, “What happened to this lead?” or “Why are replies dropping?”

Think of it this way—GPT is your thinking engine. Sequencing is your delivery engine. Scoring is your prioritization engine.

And the dashboard? That’s your interface. The one place where strategy, operations, and outcomes meet. Where everyone sees what’s working, what’s failing, and what’s next.

Build this well, and you’ve built not just a system—but a sales OS your entire team can trust.

Final Thoughts: From Campaigns to Intelligence—You’ve Built a Sales System That Learns

What started as a discussion about automating sales outreach with GPT has now evolved into a complete, modular, AI-powered sales system.

In this final phase, we added the one element that separates good automation from truly scalable growth: a feedback loop that learns. You’ve now built a system that doesn’t just send messages—it observes, interprets, adapts, and improves over time.

Every outreach attempt is now a data point. Every reply, an insight. Every interaction, a prompt refinement opportunity. Whether it’s GPT classifying replies, scoring lead intent, or adjusting message tone, your system is no longer static. It evolves.

And just as importantly—you’ve made it visible. With centralized dashboards tracking message performance, lead behavior, prompt effectiveness, and system health, your team finally has the clarity it needs to act with confidence.

What you’ve built over this series isn’t just an automation setup.

It’s a Sales Intelligence Engine—built with AI, structured like a product, and designed to scale without guesswork.

You now have:

- A segmentation model that identifies who matters

- A validation layer that ensures accuracy

- A content engine that personalizes every touchpoint

- A delivery system that coordinates messages across channels

- A scoring mechanism that prioritizes based on behavior

- And a feedback loop that turns every outreach into learning

This is what modern outbound looks like when it’s done right.

Want to Implement This System in Your Stack?

If you’ve followed this series and want to bring it to life—whether with Airtable, Notion, HubSpot, LangChain, Make, or your custom stack—we can help you design it for your workflow, your tech team, or your SDRs.

Let’s talk. Or subscribe to the newsletter for updates, workshop invites, and new playbooks beyond this series.